The Modern Developer’s Guide to Creating an Intelligent News Aggregator

In today’s fast-paced digital world, information overload is a significant challenge. Staying updated requires sifting through countless sources, a task that is both time-consuming and inefficient. This is where automated news rollup systems come into play—intelligent applications that aggregate, process, and present information in a digestible format. Building such a system from the ground up is an excellent project for showcasing the power and versatility of the modern JavaScript ecosystem, from backend processing with Node.js to dynamic frontends built with frameworks like React or Vue, all optimized by powerful bundlers like Rollup.js.

This article provides a comprehensive technical guide to building an automated news rollup system. We will explore the complete lifecycle of the project, starting with a robust Node.js backend for fetching and parsing data from various sources like RSS feeds. We’ll then integrate Artificial Intelligence (AI) to intelligently summarize articles, transforming raw data into concise insights. Finally, we’ll construct a sleek, responsive frontend and discuss the critical role of build tools like Rollup and Vite in optimizing performance and developer experience. Along the way, we’ll cover best practices, testing strategies, and advanced techniques to create a production-ready application.

Section 1: Architecting the Backend for Data Aggregation

The foundation of our news rollup system is a reliable backend responsible for fetching, parsing, and storing news articles from multiple sources. Node.js is a strong choice for this task because its non-blocking I/O model handles many concurrent network requests efficiently. Node.js continues to improve performance with each release, and alternative runtimes such as Deno and the performance-focused Bun are also gaining traction for similar I/O-bound tasks.
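To make the non-blocking claim concrete, here is a minimal sketch that uses timers as a stand-in for network latency (an assumption: real feed requests vary in duration and can fail). The helper names `simulateRequest`, `fetchSequentially`, `fetchConcurrently`, and `timeIt` are hypothetical. Awaiting three 100 ms "requests" one after another takes roughly 300 ms, while starting them together with `Promise.all` takes roughly 100 ms, because the event loop keeps working while each one waits.

```javascript
// Timers stand in for network latency here (an assumption): each "request"
// resolves after `ms` milliseconds without blocking the event loop.
function simulateRequest(name, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(name), ms));
}

// Waits for each request to finish before starting the next one.
async function fetchSequentially(names, ms) {
  const results = [];
  for (const name of names) {
    results.push(await simulateRequest(name, ms));
  }
  return results;
}

// Starts every request at once and waits for all of them together.
function fetchConcurrently(names, ms) {
  return Promise.all(names.map((name) => simulateRequest(name, ms)));
}

// Measures how long an async function takes to settle, in milliseconds.
async function timeIt(fn) {
  const start = Date.now();
  const result = await fn();
  return { result, elapsedMs: Date.now() - start };
}

// Usage: compare the two strategies on three simulated 100 ms feeds.
const feeds = ['feed-a', 'feed-b', 'feed-c'];
timeIt(() => fetchSequentially(feeds, 100))
  .then(({ elapsedMs }) => console.log(`sequential: ${elapsedMs} ms`))
  .then(() => timeIt(() => fetchConcurrently(feeds, 100)))
  .then(({ elapsedMs }) => console.log(`concurrent: ${elapsedMs} ms`));
```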

Choosing a Framework and Fetching Data

To structure our backend application, we'll use a web framework. Express.js remains a popular, minimalist choice, but modern alternatives are worth considering. Koa, created by the team behind Express, is designed around async/await middleware, while Fastify is known for its high throughput and low overhead. For larger, more complex applications, frameworks like NestJS or AdonisJS provide a more opinionated, modular architecture.

For this example, we’ll use Express.js and the rss-parser library to fetch and parse RSS feeds—a common format for news distribution.

First, set up a new Node.js project:

npm init -y
npm install express rss-parser cors

Next, we can create a simple server that exposes an endpoint to fetch news from a predefined list of RSS feeds. This script will iterate through the URLs, parse the feeds, and return a consolidated list of articles.

// server.js
const express = require('express');
const Parser = require('rss-parser');
const cors = require('cors');

const app = express();
const parser = new Parser();
const PORT = process.env.PORT || 3001;

app.use(cors()); // Enable CORS for frontend requests

const RSS_FEEDS = [
  'http://rss.cnn.com/rss/cnn_topstories.rss',
  'https://feeds.bbci.co.uk/news/world/rss.xml',
  // Add more RSS feed URLs here
];

// Endpoint to fetch and roll up news
app.get('/api/news', async (req, res) => {
  try {
    const allArticles = [];

    // Use Promise.all to fetch feeds concurrently; each feed catches its own
    // errors, so one failing source does not break the whole response
    await Promise.all(RSS_FEEDS.map(async (feedUrl) => {
      try {
        const feed = await parser.parseURL(feedUrl);
        feed.items.forEach((item) => {
          allArticles.push({
            title: item.title,
            link: item.link,
            pubDate: item.pubDate,
            contentSnippet: item.contentSnippet,
            source: feed.title,
          });
        });
      } catch (error) {
        console.error(`Failed to fetch or parse feed: ${feedUrl}`, error);
      }
    }));

    // Sort articles by publication date, newest first; items with a missing
    // or unparseable pubDate are treated as oldest and sort last
    allArticles.sort((a, b) => (Date.parse(b.pubDate) || 0) - (Date.parse(a.pubDate) || 0));

    res.json(allArticles);
  } catch (error) {
    console.error('Error fetching news feeds:', error);
    res.status(500).json({ message: 'Failed to fetch news' });
  }
});

app.listen(PORT, () => {
  console.log(`Server running on http://localhost:${PORT}`);

});

This script provides a solid foundation. In a real-world application, you would add a database (like MongoDB or PostgreSQL) to store the articles, preventing duplicate entries and creating a historical archive. You would also implement a cron job or scheduler to fetch news periodically rather than on every API request.
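Before a database is in place, duplicate stories can still be filtered in memory. The sketch below is one possible approach, not part of the original server: `normalizeLink` and `dedupeArticles` are hypothetical helpers, and the rule that `utm_*` tracking parameters, URL fragments, the http/https difference, and trailing slashes never distinguish two articles is an assumption you may need to adjust per source.

```javascript
// Normalizes an article link so trivially different URLs compare equal.
// Assumption: utm_* parameters, #fragments, http vs https, and a trailing
// slash never identify a different article.
function normalizeLink(link) {
  try {
    const url = new URL(link);
    if (url.protocol === 'http:') url.protocol = 'https:';
    url.hash = '';
    // Copy the keys first so deleting does not disturb the iteration.
    for (const key of [...url.searchParams.keys()]) {
      if (key.startsWith('utm_')) url.searchParams.delete(key);
    }
    // Re-serialize the query; an empty string removes the "?" entirely.
    url.search = url.searchParams.toString();
    if (url.pathname.length > 1 && url.pathname.endsWith('/')) {
      url.pathname = url.pathname.slice(0, -1);
    }
    return url.toString();
  } catch {
    return String(link).trim(); // not a valid absolute URL: compare as-is
  }
}

// Keeps the first occurrence of each normalized link; items without a link are kept.
function dedupeArticles(articles) {
  const seen = new Set();
  return articles.filter((article) => {
    if (!article.link) return true;
    const key = normalizeLink(article.link);
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });
}
```

Because the server sorts newest-first before responding, calling `dedupeArticles(allArticles)` just before `res.json(...)` keeps the most recent copy of each story. A database would typically enforce the same rule with a unique index on the normalized link.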

Section 2: Integrating AI for Intelligent Summarization

To elevate our news rollup from a simple aggregator to an intelligent assistant, we can use AI to generate concise summaries of each article. This lets users grasp the key points of a story without reading the full text. We can achieve this by integrating with a Large Language Model (LLM) API, such as one from OpenAI, Anthropic, or Google.

Setting Up the Summarization Service

The process involves taking the content of an article and sending it to the AI model with a specific instruction, or “prompt,” to summarize it. Because RSS items often include only a short snippet, the full text usually has to be scraped from the article's web page first. Python is a popular choice for AI tasks due to its rich ecosystem of libraries, but this can also be accomplished in Node.js.
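For readers who would rather stay in Node.js, here is a minimal sketch of the summarization call using the built-in `fetch` (Node.js 18 or later) against OpenAI's Chat Completions REST endpoint, with the same prompt, model, temperature, and token limit as the Python example that follows. The helper names `buildSummaryRequest` and `summarizeWithAI` are hypothetical, the 4,000-character truncation mirrors the Python code rather than any documented API limit, and the API key is assumed to be in the `OPENAI_API_KEY` environment variable.

```javascript
// Character-based truncation, copied from the Python example (not an API limit).
const MAX_INPUT_CHARS = 4000;

// Hypothetical helper: builds the Chat Completions request body.
function buildSummaryRequest(text, model = 'gpt-3.5-turbo') {
  return {
    model,
    messages: [
      {
        role: 'system',
        content: 'You are a helpful assistant that summarizes news articles into 2-3 concise sentences.',
      },
      {
        role: 'user',
        content: `Please summarize the following article text:\n\n${text.slice(0, MAX_INPUT_CHARS)}`,
      },
    ],
    temperature: 0.5,
    max_tokens: 150,
  };
}

// Hypothetical helper: sends the request with Node's built-in fetch.
// Assumes OPENAI_API_KEY is set in the environment.
async function summarizeWithAI(text) {
  if (!text || text.length < 100) return 'Content too short to summarize.';
  const response = await fetch('https://api.openai.com/v1/chat/completions', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
    },
    body: JSON.stringify(buildSummaryRequest(text)),
  });
  if (!response.ok) {
    throw new Error(`OpenAI request failed with status ${response.status}`);
  }
  const data = await response.json();
  return data.choices[0].message.content.trim();
}
```

Keeping the request body in a separate pure function makes the prompt easy to unit-test without calling the API.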

Here’s an example using Python and the OpenAI library. First, install the necessary packages:

pip install openai beautifulsoup4 requests

Next, we can create functions that scrape the article content and send it to the GPT model for summarization. Note: this requires an API key from OpenAI.

# a_summarizer.py

import os

import requests

from bs4 import BeautifulSoup

from openai import OpenAI

# It's best practice to set the API key as an environment variable

client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))

def scrape_article_text(url):

"""Scrapes the main text content from a news article URL."""

try:

response = requests.get(url, headers={'User-Agent': 'Mozilla/5.0'}, timeout=10)

response.raise_for_status()

soup = BeautifulSoup(response.content, 'html.parser')

# This is a simplistic approach; robust scraping requires more specific selectors

paragraphs = soup.find_all('p')

article_text = ' '.join([p.get_text() for p in paragraphs])

return article_text

except requests.RequestException as e:

print(f"Error scraping {url}: {e}")

return None

def summarize_text_with_ai(text):

"""Sends text to OpenAI API for summarization."""

if not text or len(text) < 100: # Don't summarize very short texts

return "Content too short to summarize."

try:

response = client.chat.completions.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": "You are a helpful assistant that summarizes news articles into 2-3 concise sentences."},

{"role": "user", "content": f"Please summarize the following article text:\n\n{text[:4000]}"} # Truncate to fit model context limit

],

temperature=0.5,

max_tokens=150

)

summary = response.choices[0].message.content.strip()

return summary

except Exception as e:

print(f"Error with AI summarization: {e}")

return "Summary not available."

# Example Usage

if __name__ == "__main__":

article_url = 'https://www.bbc.com/news/world-us-canada-68032338' # Replace with a real, current URL

article_content = scrape_article_text(article_url)

if article_content:

summary = summarize_text_with_ai(article_content)

print("--- SUMMARY ---")

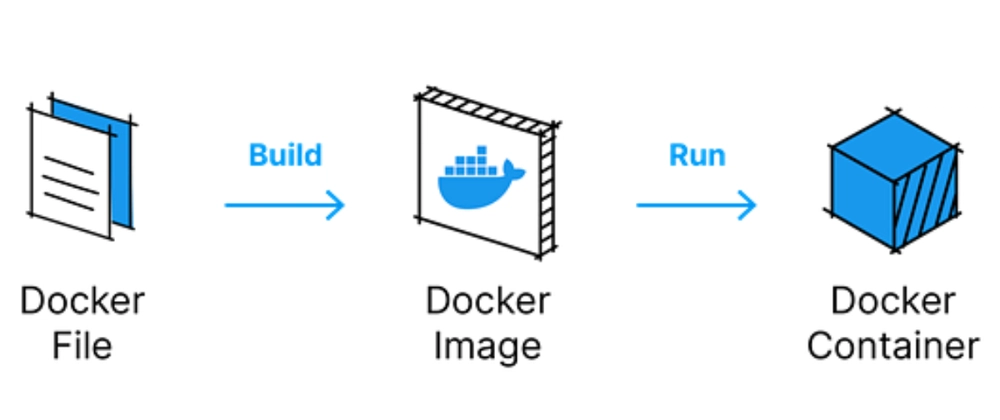

print(summary)This Python script can be exposed as a separate microservice that the main Node.js backend calls. This separation of concerns keeps the AI logic isolated. Key considerations here include handling API rate limits, managing costs, and refining the prompt to get the desired summary style and length. Robust web scraping is also a complex challenge, as each news site has a different HTML structure. Libraries like `trafilatura` can offer more reliable content extraction.
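Rate limits typically surface as HTTP 429 responses, and a common way to handle them is to retry with exponential backoff. The following is a minimal Node.js sketch of that pattern, for example around the backend's call to the summarization microservice. `withRetry` and `isRetryable` are hypothetical helpers, and the sketch assumes the wrapped call throws an error carrying a numeric `status` property (a convention you would set yourself when a response is not OK); only 429 and 5xx statuses are retried.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Assumption: errors carry an HTTP `status`; only 429 and 5xx are worth retrying.
function isRetryable(error) {
  return Boolean(error) && (error.status === 429 || (error.status >= 500 && error.status < 600));
}

// Hypothetical helper: retries `operation` up to `retries` extra times.
async function withRetry(operation, { retries = 3, baseDelayMs = 500 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await operation();
    } catch (error) {
      if (attempt >= retries || !isRetryable(error)) throw error;
      // Delay doubles on each attempt (base, 2x base, 4x base, ...) plus up to
      // 100 ms of random jitter so parallel clients do not retry in lockstep.
      const delay = baseDelayMs * 2 ** attempt + Math.random() * 100;
      await sleep(delay);
    }
  }
}
```

Honouring a `Retry-After` header, when the API sends one, is a natural refinement of the fixed doubling schedule.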

Section 3: Building the Frontend with Modern Bundlers

With the backend serving our aggregated and summarized news, we need a frontend to display it. The modern JavaScript landscape offers plenty of choices. React remains the most widely used option, while Vue.js and Svelte are powerful alternatives. For teams with an enterprise background, Angular remains a strong contender. Newer libraries are also pushing the boundaries of performance: SolidJS with fine-grained reactivity, and Lit with lightweight, standards-based Web Components.

The Role of Rollup.js and Vite

Regardless of the framework, we need a build tool to bundle our code for the browser. This is where tools like Rollup.js shine, with a long-standing focus on efficiency and web standards. Rollup was one of the first bundlers to popularize tree-shaking, a process that eliminates unused code, resulting in smaller, faster bundles. It is built around ES Modules (ESM) and can output them natively, aligning with modern browser capabilities.

While you can configure Rollup directly, many developers now use higher-level tools that use Rollup under the hood. The biggest player in this space is Vite, which has grown rapidly in popularity. During development, Vite serves source files as native ES Modules, giving very fast server starts and Hot Module Replacement (HMR). For production, it uses Rollup to create highly optimized static assets. This gives developers the best of both worlds. It stands in contrast to older tools like Webpack, which, while incredibly powerful and configurable, often require more complex setup.

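Here is a simplified example of a `rollup.config.js` file for a basic JavaScript project. It shows how to specify an input file and an output format, and how to use plugins (like `@rollup/plugin-node-resolve` to handle npm packages). Because the file uses ES module syntax, Rollup 3 and later load it as-is only if it is named `rollup.config.mjs` or your package.json sets `"type": "module"`; otherwise, run Rollup with the `--bundleConfigAsCjs` flag.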

// rollup.config.js
import { nodeResolve } from '@rollup/plugin-node-resolve';
import commonjs from '@rollup/plugin-commonjs';
import terser from '@rollup/plugin-terser';

export default {
  input: 'src/main.js', // Your application's entry point
  output: {
    file: 'dist/bundle.js',
    format: 'iife', // Immediately Invoked Function Expression - good for browsers
    sourcemap: true,
  },
  plugins: [
    nodeResolve(), // Helps Rollup find modules in node_modules
    commonjs(), // Converts CommonJS modules to ES6
    terser() // Minifies the output bundle for production
  ]

};

This configuration demonstrates the core concept of bundling. However, for a framework like React, you'd also need a transpilation plugin (such as `@rollup/plugin-babel` or `@rollup/plugin-swc`) to handle JSX. This is why tools like Vite, which pre-configure all of this, are so popular. Following developments in Babel and the faster, Rust-based SWC is worthwhile when optimizing build pipelines.
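For comparison, a Vite setup for a React frontend needs very little configuration. This is a sketch, assuming a project scaffolded with Vite and the official `@vitejs/plugin-react` plugin; the `/api` proxy target assumes the Express server from Section 1 is running on its default port 3001.

```javascript
// vite.config.js
// Assumes: npm install --save-dev vite @vitejs/plugin-react
import { defineConfig } from 'vite';
import react from '@vitejs/plugin-react';

export default defineConfig({
  // Handles JSX transformation and React Fast Refresh during development.
  plugins: [react()],
  server: {
    // Forward /api requests from the Vite dev server to the Express backend
    // (assumed to be on port 3001), so the frontend can use relative URLs.
    proxy: {
      '/api': 'http://localhost:3001',
    },
  },
  build: {
    // Production builds are bundled by Rollup; also emit source maps.
    sourcemap: true,
  },
});
```

With the proxy in place, the frontend can call `fetch('/api/news')` during development without hard-coding the backend's host and port.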

Section 4: Best Practices, Testing, and Optimization

Building a functional application is just the first step. To create a robust and maintainable system, we must adhere to best practices, implement a comprehensive testing strategy, and continuously optimize for performance.

Code Quality and Tooling

Maintaining a clean and consistent codebase is essential, especially in a team environment. ESLint and Prettier are the standard tools for this: ESLint catches problematic patterns and gains rules as new JavaScript features arrive, while Prettier enforces uniform code formatting across the project. Integrating both into your CI/CD pipeline ensures that all code meets these standards before it is merged.

Adopting TypeScript to add static typing to your JavaScript project can also substantially reduce bugs and improve developer experience by providing autocompletion and type-checking in your editor.
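You can get much of this benefit without converting files to `.ts`: the TypeScript checker also understands JSDoc annotations in plain JavaScript files that start with `// @ts-check`. The sketch below describes the article objects built by the Section 1 server; the `Article` type and the `toArticle` helper are hypothetical.

```javascript
// @ts-check
// With the directive above, TypeScript-aware editors type-check this plain
// JavaScript file using the JSDoc annotations below.

/**
 * Shape of an aggregated article, mirroring the fields built in server.js.
 * @typedef {Object} Article
 * @property {string} title
 * @property {string} link
 * @property {string | undefined} pubDate
 * @property {string | undefined} contentSnippet
 * @property {string} source
 */

/**
 * Hypothetical helper: maps a raw feed item to an Article with safe defaults.
 * @param {{ title?: string, link?: string, pubDate?: string, contentSnippet?: string }} item
 * @param {string} source
 * @returns {Article}
 */
function toArticle(item, source) {
  return {
    title: item.title ?? '(untitled)',
    link: item.link ?? '',
    pubDate: item.pubDate,
    contentSnippet: item.contentSnippet,
    source,
  };
}
```

In an editor with TypeScript support, a call such as `toArticle({ title: 42 }, 'BBC')` would be flagged, since `title` is declared as a string.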

Comprehensive Testing Strategy

A solid testing strategy should cover all parts of the application.

- Unit Tests: Use frameworks like Jest, Vitest, or Mocha to test individual functions in isolation. Vitest, which is built on Vite and supports ES Modules natively, reflects a broader trend toward faster, ESM-native test runners.

- Integration Tests: Test the interactions between different parts of your system, such as verifying that the backend API correctly fetches and summarizes news when called.

- End-to-End (E2E) Tests: Use tools like Cypress, Playwright, or Puppeteer to simulate real user interactions in a browser. Following new releases of Cypress and Playwright lets you take advantage of their latest browser-automation features when testing complex user flows.

Performance Optimization

Optimization is an ongoing process. On the backend, implement caching with a tool like Redis to store the results of expensive operations such as feed fetches and AI summaries; this reduces latency and cuts API costs. On the frontend, use code-splitting so that JavaScript is loaded only when it's needed; bundlers like Rollup, Vite, and Webpack can emit a separate chunk for each dynamic `import()`. Also, compress images and serve modern formats like WebP. For applications with complex graphics or visualizations, keeping up with performance improvements in libraries such as Three.js or PixiJS can pay off.
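The caching idea can be sketched without Redis. The `TtlCache` class below is a hypothetical, single-process stand-in: it keeps each result for a fixed time-to-live and hands concurrent callers the same in-flight promise, so two simultaneous requests for the same article trigger only one AI call. Once several server instances need to share cached summaries, an external store such as Redis takes over this role.

```javascript
// Hypothetical in-memory stand-in for Redis (single process only).
// Expired entries are replaced on the next lookup but never actively evicted.
class TtlCache {
  constructor(ttlMs) {
    this.ttlMs = ttlMs;
    this.entries = new Map(); // key -> { promise, expiresAt }
  }

  // Returns the cached promise for `key` if it has not expired; otherwise
  // calls `loader` once and caches the promise it produces.
  getOrLoad(key, loader) {
    const now = Date.now();
    const cached = this.entries.get(key);
    if (cached && cached.expiresAt > now) return cached.promise;

    const promise = Promise.resolve()
      .then(loader)
      .catch((error) => {
        // Don't cache failures: drop this entry (unless it was already replaced)
        // so the next caller retries, then re-throw to the current callers.
        if (this.entries.get(key)?.promise === promise) this.entries.delete(key);
        throw error;
      });
    this.entries.set(key, { promise, expiresAt: now + this.ttlMs });
    return promise;
  }
}
```

For example, `cache.getOrLoad(article.link, () => summarize(article.link))`, where `cache` is a `TtlCache` instance and `summarize` is whatever function calls your summarization service.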

Conclusion: Putting It All Together

We have journeyed through the process of building a sophisticated, AI-powered news rollup system using the modern JavaScript ecosystem. We started by architecting a resilient Node.js backend for data aggregation, enhanced its capabilities with AI-driven summarization, and built a user-friendly frontend optimized by the powerful bundling capabilities of Rollup.js and Vite. Along the way, we emphasized the importance of best practices, from code quality and static typing with TypeScript to a multi-layered testing strategy.

This project demonstrates that the JavaScript ecosystem provides all the tools necessary to build complex, intelligent, and high-performance applications. The continued evolution of Rollup, Vite, and the frameworks they support gives developers access to cutting-edge technology. The next step is to take these concepts and expand upon them, perhaps by adding user authentication, personalized news feeds, or deploying the application to a serverless architecture. The possibilities are endless.